5 Myths of Digital Photography

Myths and misconceptions persist because they’re either compelling or no one bothers to correct them. For photographers, many myths of digital photography arose from imperfect analogies to film photography. Given the complex physics behind digital imaging, it’s not totally surprising that some myths persist, but here are a few you might want to be aware of.

ISO changes sensitivity

Unlike film, digital sensors have a single sensitivity. Changing the ISO on a digital camera doesn’t make the sensor more sensitive (i.e., capture more photons). Instead, the camera applies gain, amplifying a weak signal along with its accompanying noise. It’s kind of like turning up the volume on a low-quality audio recording. You can hear it, but it still sounds lousy.
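The volume-knob analogy can be put in numbers. What follows is a minimal sketch under a simplified model, not a description of any particular camera: noise is photon shot noise plus read noise added before the amplifier, and the function name and electron counts are invented for illustration.

```python
import math

def snr_after_gain(photoelectrons, read_noise_e, gain):
    """Signal-to-noise ratio of one pixel after amplification.

    Simplified, hypothetical model: shot noise is sqrt(signal), read noise
    is added before the amplifier, and gain scales both equally.
    """
    shot_noise = math.sqrt(photoelectrons)
    noise = math.hypot(shot_noise, read_noise_e)  # add noise sources in quadrature
    return (gain * photoelectrons) / (gain * noise)

# Same weak signal (100 electrons) at unity gain and at 128x gain,
# which is the ratio between ISO 100 and ISO 12800.
low = snr_after_gain(100, read_noise_e=3.0, gain=1)
high = snr_after_gain(100, read_noise_e=3.0, gain=128)
print(f"SNR at 1x gain: {low:.2f}, at 128x gain: {high:.2f}")
```

In this model the gain cancels out of the ratio, so the picture gets brighter but no cleaner. Real sensors also add some noise after the amplifier, which this sketch ignores.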

![ISO 12800](https://blog.photoshelter.com/wp-content/uploads/2016/09/DSC_6844-low.jpg)

![ISO 100](https://blog.photoshelter.com/wp-content/uploads/2016/09/DSC_6844.jpg)

An easy way to illustrate this is to take a low-ISO and a high-ISO photo on a bright day. Even though there are plenty of photons to go around, using the high ISO makes the sensor capture less light by “fooling” each pixel into thinking it’s full when it’s not. As ISO increases, dynamic range decreases. Noise also becomes more apparent, leading to poorer image quality.
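To see why dynamic range shrinks, here is a rough sketch that assumes the pixel’s output clips once the amplified signal reaches the full-well level, and that read noise referred to the input stays constant. The full-well and read-noise figures are hypothetical, chosen only to make the trend visible.

```python
import math

def dynamic_range_stops(full_well_e, read_noise_e, gain):
    """Approximate dynamic range in stops at a given gain.

    Hypothetical model: output clips once gain * electrons reaches the
    full-well level, and input-referred read noise is constant.
    """
    max_recordable_e = full_well_e / gain  # brightest signal before clipping
    return math.log2(max_recordable_e / read_noise_e)

for iso, gain in [(100, 1), (800, 8), (12800, 128)]:
    stops = dynamic_range_stops(full_well_e=50_000, read_noise_e=3.0, gain=gain)
    print(f"ISO {iso:>5}: about {stops:.1f} stops")
```

Under these assumptions, every doubling of ISO costs one stop of dynamic range, so going from ISO 100 to ISO 12800 gives up seven stops.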

Some recent cameras have been dubbed “ISO invariant” meaning the sensor read noise is constant irrespective of ISO. This allows photographers to preserve highlights in wide dynamic range scenes and boost the shadows in post processing even though the initial image might look severely underexposed.

Bottom line: High ISO yields a noisier image because physics. New technologies like ISO invariance give photographers more options to preserve dynamic range.

Higher bit-depth means better quality images

Bit-depth is related to the resolution of the analog-to-digital converter in your camera. The higher the bit-depth, the more finely the signal from a pixel can be divided into smaller units, leading to smoother tonal transitions. If current cameras have 14-bit A/D converters that can address 16,384 levels, why not build 16-bit or 24-bit converters for smoother gradations? Aside from the huge files that would result from more data, there is a point of diminishing returns with such a fine level of quantization because of noise.

Gah, noise! It’s everywhere. It starts with noise in the light that we’re trying to record when taking a photo (shot noise). It extends to noise introduced by circuitry at different points in the signal processing chain (read noise, dark noise, etc). If you try to slice your signal into smaller units (with more bit-depth) and those units are smaller than your noise, you’re not gaining any fidelity.
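A quick way to check this is to compare the size of one converter step with the noise floor. The sketch below assumes the full well is spread evenly across every level; the full-well and read-noise values are hypothetical, not measurements from a real sensor.

```python
def adc_levels(bits):
    """Number of distinct values an A/D converter with `bits` can address."""
    return 2 ** bits

def step_size_e(full_well_e, bits):
    """Electrons per ADC step if the full well is spread across every level."""
    return full_well_e / adc_levels(bits)

FULL_WELL_E = 40_000   # hypothetical full-well capacity in electrons
READ_NOISE_E = 3.0     # hypothetical read noise in electrons

for bits in (12, 14, 16, 24):
    step = step_size_e(FULL_WELL_E, bits)
    verdict = "finer than the noise" if step < READ_NOISE_E else "coarser than the noise"
    print(f"{bits}-bit: {adc_levels(bits):>10,} levels, {step:.4f} e-/step ({verdict})")
```

Once a step is smaller than the noise, the extra bits mostly record the noise in finer detail rather than the scene.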

An imperfect analogy is why Olympic swimmers are only timed to 1/100th of a second. In a 50m freestyle, 1/1000th of a second equates to about 2.39mm of travel. But Olympic pool regulations allow 3cm of variation per lane. So although timing devices can record with more precision, you can’t guarantee that the silver medalist hadn’t traveled farther than the gold medalist. The variation in pool length is like noise. There’s no point in recording finer detail if you can’t get around the noise problem.
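The arithmetic behind the analogy is easy to reproduce. This small sketch uses only the two figures quoted above (2.39mm per 1/1000th of a second and 3cm of lane variation); the variable names are just for illustration.

```python
MM_PER_MILLISECOND = 2.39   # distance covered in 1/1000 s, from the text
LANE_TOLERANCE_MM = 30.0    # 3 cm of allowed variation, from the text

# How much swimming time does the allowed length variation correspond to?
tolerance_ms = LANE_TOLERANCE_MM / MM_PER_MILLISECOND
print(f"3 cm of pool variation is worth about {tolerance_ms:.1f} ms of swimming")

# Average speed implied by the 2.39 mm figure (mm per ms equals m per s),
# and the 50 m time that goes with it.
speed_m_per_s = MM_PER_MILLISECOND
print(f"Implied 50 m time: about {50 / speed_m_per_s:.1f} s")
```

The lane tolerance works out to somewhere between 12 and 13 milliseconds, which is already larger than the 1/100th of a second the clocks report.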

Bottom line: Wanting more bit-depth is like wanting more megapixels. If image quality is your ultimate goal, then there are more factors at play than a single variable.

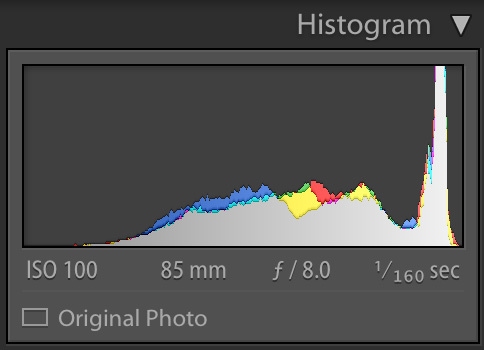

There is a perfect exposure for a given photo

No, but there is an optimal signal-to-noise ratio (SNR).

Are you trying to expose a backlit face or throw it into silhouette? The perfect exposure to a human is subjective, but from an electronics standpoint, you want to have the best SNR. It sounds really nerdy, but a strong SNR gives you the most latitude to post-process the image. This is especially true for photographers who ETTR (expose to the right).

Your light meter typically selects an exposure based on an 18% gray, which won’t necessarily correspond to ETTR.

DPReview’s Richard Butler writes, “once captured, the signal-to-noise ratio of any tone can’t be improved upon. It can get worse as electronic noise is added, but if you try boosting or pushing the signal, you end up boosting the noise by the same amount and the ratio stays the same. This is why your initial exposure is so important.”

Even though an ETTR image might look too bright, it’s actually better to record an optimal signal and then bring down the brightness (or adjust curves) in post.
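The benefit of the extra light can be estimated with a shot-noise-only model, where noise is the square root of the signal. This is a sketch under that assumption, with a made-up electron count, and it presumes the brighter exposure does not clip the highlights.

```python
import math

def shot_limited_snr(photoelectrons):
    """SNR when photon shot noise dominates: signal / sqrt(signal)."""
    return photoelectrons / math.sqrt(photoelectrons)

def with_extra_stops(photoelectrons, stops):
    """Signal collected after increasing exposure by `stops`."""
    return photoelectrons * 2 ** stops

metered = 1_000                       # hypothetical electrons in a shadow tone
ettr = with_extra_stops(metered, 1)   # one stop more light at capture

snr_metered = shot_limited_snr(metered)
snr_ettr = shot_limited_snr(ettr)

# Darkening the ETTR file in post scales signal and noise together,
# so the ratio recorded at capture is what survives.
print(f"metered: {snr_metered:.1f}, ETTR +1 stop: {snr_ettr:.1f}")
print(f"improvement: {snr_ettr / snr_metered:.2f}x")
```

In this model each extra stop of exposure improves SNR by a factor of the square root of two, and that gain is kept when the image is darkened afterwards.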

Bottom line: Want the best quality image from your equipment? Shoot RAW at your camera’s base ISO and ETTR.

The equivalent focal length lens on two different sensor sizes are not equivalent

With all the various sensor sizes, photographers seem obsessed with “equivalence” – how do this camera and lens compare to traditional 35mm? Most photographers know that if a sensor has a 2x crop factor (Micro 4/3), you need to multiply the lens focal length by that factor to get the full-frame equivalent. Lesser known is that the aperture also needs to be multiplied by the crop factor to get an equivalent depth-of-field (DOF). Tony Northrup explains:

Sensor size affects the DOF, with larger sensors yielding shallower depth of field. So to get the same DOF as a 200mm f/5.6 on a full-frame camera, you’d need a 100mm f/2.8 on a Micro 4/3 camera.
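The conversion is a pair of multiplications, sketched here as a small helper. The function name is hypothetical, and the result describes field of view and depth of field only, not exposure.

```python
def full_frame_equivalent(focal_length_mm, f_number, crop_factor):
    """Return the (focal length, f-number) on full frame that gives a similar
    field of view and depth of field. Hypothetical helper for illustration."""
    return focal_length_mm * crop_factor, f_number * crop_factor

focal, aperture = full_frame_equivalent(100, 2.8, crop_factor=2.0)
print(f"100mm f/2.8 on Micro 4/3 ~ {focal:.0f}mm f/{aperture:.1f} on full frame")
```

With a 2x crop factor this reproduces the 200mm f/5.6 pairing from the example above.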

Bottom line: If shallow depth-of-field is your ultimate goal, choose a larger sensor with fast glass.

Larger pixels yield better image quality

In low light, it’s true that larger pixels typically have a higher SNR because they can capture more light. But larger pixels trade resolution (that is, the number of pixels on subject) for more light per pixel. Interestingly, it turns out in brightly lit scenes, smaller pixels have a higher SNR and better resolving power.

Although the sensors used in many types of astrophotography have larger pixels (the Kodak KAI 11002 used on the Atik 11000 has 9µm pixels), most current full-frame DSLRs have settled on a pixel size of about 5-6.5µm. By contrast, microscopy systems can have pixel sizes of 24µm, and Phase One’s 100MP back has a 4.6µm pixel size. Camera manufacturers select pixel size for specific applications, and there are always trade-offs to be made.

Phase One’s 100MP back has modestly sized pixels but a gigantic sensor, which captures much more light and yields higher image quality.

As you can see in the above chart, pixel size is not a good determinant of image quality except in low light applications.

Sensor size and aperture (and the resulting etendue) are better predictors of image quality. Simply stated, at a given focal length and f-stop, a camera with a large sensor gathers much more light than one with a smaller sensor. More light, more signal. Better SNR, better image quality.
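For a sense of scale, the sketch below compares total light for two common formats, assuming light collected at the same f-stop and exposure time scales with sensor area. The sensor dimensions are nominal values that do not come from this article, and the SNR figure assumes shot noise dominates.

```python
import math

# Nominal sensor dimensions in mm (assumed, not taken from the article).
FULL_FRAME = (36.0, 24.0)
MICRO_FOUR_THIRDS = (17.3, 13.0)

def area_mm2(sensor):
    """Sensor area from a (width, height) pair in millimetres."""
    width, height = sensor
    return width * height

def light_ratio(large, small):
    """Ratio of total light collected at the same f-stop and exposure time,
    assuming it scales with sensor area."""
    return area_mm2(large) / area_mm2(small)

ratio = light_ratio(FULL_FRAME, MICRO_FOUR_THIRDS)
print(f"light ratio: {ratio:.2f}x ({math.log2(ratio):.2f} stops)")
print(f"shot-noise-limited SNR advantage: {math.sqrt(ratio):.2f}x")
```

Under these assumptions the full-frame sensor collects a little under four times the light, or close to two stops, regardless of how that area is divided into pixels.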

Many medium format shooters claim that larger pixels, greater bit depth, and higher resolution yield better quality images. More likely, a larger sensor combined with larger entrance pupils (and glass designed for those sensors) captures much more light than 35mm at the same focal length and exposure.

Bottom line: Don’t worry about the size of your pixels. It’s what you do with them that matters.