How Computational Photography is Changing Your Conception of Photography

In the era of film, photography was largely relegated to the recording of light via optical processes. Although specialized gear like the strip camera challenged the orthodoxy of the single exposure, film photography (even in the art photography world) was a fairly literal way of seeing the world.

But in digital photography, light is converted into bits of data, and increasingly powerful microprocessors and software algorithms allow us to alter that data in ways that would have been impractical or impossible with optics alone. The future of photography is software, and the future has already arrived.

In some ways, the advent of computational photography brings enormous creative potential. In other ways, it represents an ethical hornets’ nest in an era of fake news, when the public is increasingly skeptical of the information it consumes, whether news or otherwise.

Computational photography is already all around us. Let’s take a look!

It lives!

Panoramas

Creating panoramic images in the digital age used to require laborious and often inaccurate Photoshop workflows. Better algorithms and faster processors brought more automation after capture, and the current crop of smartphones can now stitch on the fly: they blend frames seamlessly, correct for parallax distortion, and even compensate for significant variations in lighting.
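To make the lighting-compensation step concrete, here’s a toy sketch in Python. It assumes the two frames are already aligned, treats each frame as a single row of grayscale values, and takes the overlap width as known; real stitchers also have to find that alignment and handle parallax. The function names are hypothetical, and gain matching plus a linear cross-fade is just one common way to hide an exposure difference at a seam, not any particular phone’s method.

```python
# Toy panorama seam: exposure (gain) compensation plus a linear feathered blend.
# Assumptions: frames are pre-aligned 1D grayscale rows and the overlap width is known.


def gain_compensate(left, right, overlap):
    """Scale `right` so its mean brightness in the overlap matches `left`'s."""
    left_mean = sum(left[-overlap:]) / overlap
    right_mean = sum(right[:overlap]) / overlap
    gain = left_mean / right_mean if right_mean else 1.0
    return [v * gain for v in right]


def feather_blend(left, right, overlap):
    """Join two rows, cross-fading linearly from `left` to `right` across the overlap."""
    start = len(left) - overlap
    out = list(left[:start])
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight on `right` ramps toward 1 across the seam
        out.append((1 - w) * left[start + i] + w * right[i])
    out.extend(right[overlap:])
    return out


def stitch(left, right, overlap):
    if overlap <= 0:
        raise ValueError("overlap must be positive")
    return feather_blend(left, gain_compensate(left, right, overlap), overlap)


if __name__ == "__main__":
    left = [10, 20, 30, 40, 50]   # first frame
    right = [20, 25, 30, 35, 40]  # second frame: overlaps the last 2 pixels, shot half as bright
    print(stitch(left, right, overlap=2))
```

With the second frame shot at half brightness, the computed gain is 2 and the stitched row recovers the 10-to-80 ramp (up to floating-point rounding) with no visible step at the seam.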

HDR

Photographers like Trey Ratcliff made their names refining High Dynamic Range (HDR) photography, often with illustration-like results. The original technique required bracketing several exposures and then combining them into a multi-layer file. Today’s smartphones switch on HDR modes automatically when they detect a high dynamic range scene, helping photographers save their highlights while preserving shadow detail.
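Under the hood, the merge step can be sketched as a weighted average. The sketch below assumes a linear sensor response with pixel values scaled to [0, 1] (roughly true of linear raw data, not of JPEGs, which is why classic HDR tools first estimate the camera’s response curve). Each bracketed frame’s value is divided by its exposure time to estimate scene brightness, and a hypothetical “hat” weight trusts mid-tones while ignoring near-black and clipped values. The thresholds are illustrative, not taken from any particular app.

```python
# Merge bracketed exposures of one pixel into a relative radiance estimate.
# Assumption: linear sensor response, values normalized to [0, 1].


def hat_weight(v, low=0.02, high=0.98):
    """Weight mid-tones highly; ignore near-black (noisy) and near-white (clipped) values."""
    if v <= low or v >= high:
        return 0.0
    return min(v - low, high - v)


def merge_pixel(values, exposure_times):
    """Weighted average of value / exposure_time across the bracket."""
    num = den = 0.0
    for v, t in zip(values, exposure_times):
        w = hat_weight(v)
        num += w * v / t
        den += w
    if den == 0.0:
        # Every frame was clipped or black: fall back to the frame closest to mid-gray.
        i = min(range(len(values)), key=lambda k: abs(values[k] - 0.5))
        return values[i] / exposure_times[i]
    return num / den


if __name__ == "__main__":
    # One pixel shot at 1/4x, 1x and 4x exposure; the 4x frame clipped to 1.0.
    print(merge_pixel([0.1, 0.4, 1.0], [0.25, 1.0, 4.0]))
```

The clipped frame gets zero weight, so it can’t drag the estimate down. The result is a relative radiance value that can exceed 1.0; showing it on a normal screen still requires a tone-mapping step, which is where much of the distinctive look of early HDR work came from.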

Optical Aberrations (distortion, vignetting, chromatic aberration, and even blur)

Early adopter photographers champ at the bit for each new release of Adobe Camera Raw for a simple reason: new lens profiles allow automated correction of optical distortions. Does your wide-angle lens suffer from barrel distortion? Maybe that new telephoto has too much vignetting for your taste. Software allows very realistic corrections that can make a $300 lens look like a $1,000 lens.
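Lens-profile corrections boil down to applying a per-lens model in reverse. Here’s a minimal sketch, assuming a common polynomial radial distortion model (coefficients `k1` and `k2`, with negative `k1` giving barrel distortion) and a simple quadratic vignetting falloff (`v1`), with coordinates normalized so the optical center is at (0, 0). The models and coefficient values are illustrative assumptions, not the actual format of Adobe’s lens profiles.

```python
# Profile-style lens correction: radial distortion and vignetting.
# Assumptions: polynomial radial model, quadratic vignetting, coordinates normalized
# so the optical center is (0, 0). Coefficients are made up for illustration.


def distort(x, y, k1, k2):
    """Where the lens actually images an ideal point (x, y). k1 < 0 gives barrel distortion."""
    r2 = x * x + y * y
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert `distort` by fixed-point iteration (there is no general closed form)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


def devignette(value, x, y, v1):
    """Undo falloff modelled as observed = true * (1 + v1 * r^2), with v1 < 0 for darker corners."""
    return value / (1 + v1 * (x * x + y * y))


if __name__ == "__main__":
    xd, yd = distort(0.5, 0.3, k1=-0.1, k2=0.01)
    print("distorted:", (xd, yd), "corrected:", undistort(xd, yd, k1=-0.1, k2=0.01))
    print("corner brightness restored:", devignette(0.4625, 0.3, 0.4, v1=-0.3))
```

In a real correction you would run this per output pixel: compute where each ideal pixel lands in the distorted image, sample it there, and then divide out the vignetting.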

Noise reduction

Modern image processing software like Photoshop and Lightroom has built-in noise reduction controls, which often work by examining adjacent pixels within a single image. But in low light photography, a technique called median blending, which combines several exposures of the same scene, lets the magic of math lower noise dramatically.

In low light situations, the signal (i.e., light) is weak compared to the noise, which can arise from a variety of sources, including shot noise (the inherent randomness of photons) and photodiode leakage.

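But if you combine the same pixel across multiple aligned images, the random noise largely cancels out. Averaging reduces random noise by the square root of the number of images, so nine images cut it by a factor of 3. Taking the median instead is slightly less effective against purely random noise, but it is better at rejecting outliers such as hot pixels or satellite trails. Google Pixel phones use a related approach in HDR+ mode, aligning and merging a burst of frames, and stacking is also a staple technique of astrophotographers.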
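You can check the square-root rule with a quick simulation. This sketch assumes a perfectly aligned stack of a flat gray patch with independent Gaussian noise, which is a simplification (real sensor noise mixes shot noise, read noise, and more), and compares a single frame against mean and median stacks of nine frames. The helper names are hypothetical.

```python
# Simulate stacking 9 noisy frames of a flat gray patch and measure the remaining noise.
# Assumption: perfectly aligned frames with independent Gaussian noise.
import random
import statistics


def noisy_frames(n_frames, n_pixels, signal=100.0, sigma=10.0, seed=0):
    rng = random.Random(seed)
    return [[rng.gauss(signal, sigma) for _ in range(n_pixels)] for _ in range(n_frames)]


def stack(frames, combine):
    """Combine the frames pixel by pixel, e.g. with statistics.fmean or statistics.median."""
    return [combine(pixel_values) for pixel_values in zip(*frames)]


if __name__ == "__main__":
    frames = noisy_frames(n_frames=9, n_pixels=20000)
    single = statistics.pstdev(frames[0])
    for name, combine in [("mean", statistics.fmean), ("median", statistics.median)]:
        noise = statistics.pstdev(stack(frames, combine))
        print(f"{name} of 9 frames: noise {noise:.2f} vs {single:.2f} ({single / noise:.2f}x lower)")
```

Expect the mean stack to land very close to a 3x reduction and the median stack to show a somewhat smaller one; the median earns its keep when a few frames contain outliers that would skew an average.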

4D Light Field (plenoptic photography)

Traditionally, photography captures only the intensity of light. But researchers have been experimenting with capturing additional “dimensions” of data to gain more control over how raw sensor data is turned into a finished image. The Lytro Light Field Camera placed an array of microlenses in front of the camera sensor, allowing it to capture directional information about light rays. This added dimension allowed the software to refocus the image computationally in post-production.
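The refocusing trick can be illustrated with shift-and-add, the textbook way to refocus a light field. A microlens array effectively records the scene as a set of slightly offset “sub-aperture” views; shifting each view by an amount proportional to its offset and then averaging brings one chosen depth into focus while everything else blurs. This toy version assumes a 1D scene, a few views with integer offsets, and wraparound at the edges for simplicity; it is not Lytro’s actual processing pipeline.

```python
# Toy shift-and-add refocusing on a 1D light field.
# Assumptions: integer disparities, a handful of sub-aperture views, wraparound edges.


def make_views(scene, disparity, offsets):
    """Simulate sub-aperture views: a point at this depth shifts by disparity * u pixels."""
    n = len(scene)
    return {u: [scene[(i + disparity * u) % n] for i in range(n)] for u in offsets}


def refocus(views, alpha):
    """Shift each view back by alpha * u and average; integer alpha picks the focal plane."""
    n = len(next(iter(views.values())))
    out = [0.0] * n
    for u, view in views.items():
        for i in range(n):
            out[i] += view[(i - alpha * u) % n]
    return [v / len(views) for v in out]


if __name__ == "__main__":
    scene = [0] * 16
    scene[8] = 1  # a single bright point
    views = make_views(scene, disparity=2, offsets=[-1, 0, 1])
    print("focused (alpha=2):  ", refocus(views, alpha=2))
    print("defocused (alpha=0):", refocus(views, alpha=0))
```

When `alpha` matches the point’s disparity, all three views line up and the point is sharp; at any other `alpha` its energy is smeared across several pixels, which is exactly the out-of-focus blur.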

Increasingly, cameras will capture more dimensions of information. Active depth sensing is an obvious candidate that could transform everything from creative photography to security (e.g., using your face as an ID).

Multi-sensor cameras

One of the many reasons interest in consumer photography has grown so rapidly in recent years is the miniaturization of lenses and sensors, which allowed high-quality cameras to be built into smartphones. But miniaturization is not without its issues, and as far as photography is concerned, building telephoto capabilities is difficult given how thin phones are. Folded lens assemblies are one way to cheat the thickness problem. Light Co.’s ambitious L16 doesn’t just use a single folded lens; it uses an entire array of camera modules with different focal lengths, allowing the camera to computationally construct an image from multiple sensors.

On the iPhone 7 Plus, the dual camera allows the software to simulate a shallow depth of field.
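In broad strokes, a simulated shallow depth of field blurs each pixel according to how far it sits from the chosen focal plane, using depth estimated from the two cameras. The sketch below assumes a 1D grayscale row, an already-computed depth map, and a hypothetical linear rule (`strength`) mapping depth difference to blur radius. Apple’s actual Portrait mode pipeline is far more sophisticated, and real implementations take special care at object edges, which this sketch does not.

```python
# Fake shallow depth of field: blur each pixel in proportion to its distance from the focal plane.
# Assumptions: 1D grayscale row, known per-pixel depth, box blur, linear radius rule.


def blur_radius(depth, focus_depth, strength):
    """Pixels farther (in depth) from the focal plane get a larger blur radius."""
    return int(round(strength * abs(depth - focus_depth)))


def synthetic_bokeh(row, depth, focus_depth, strength=1.0):
    n = len(row)
    out = []
    for i in range(n):
        r = blur_radius(depth[i], focus_depth, strength)
        window = row[max(0, i - r):min(n, i + r + 1)]
        out.append(sum(window) / len(window))  # box blur of radius r around pixel i
    return out


if __name__ == "__main__":
    row = [0, 0, 10, 0, 0, 0, 0, 10, 0, 0]
    depth = [1] * 5 + [3] * 5  # subject at depth 1, background at depth 3
    print(synthetic_bokeh(row, depth, focus_depth=1))
```

The bright detail on the in-focus subject stays crisp, while the identical detail in the background is spread across five pixels.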

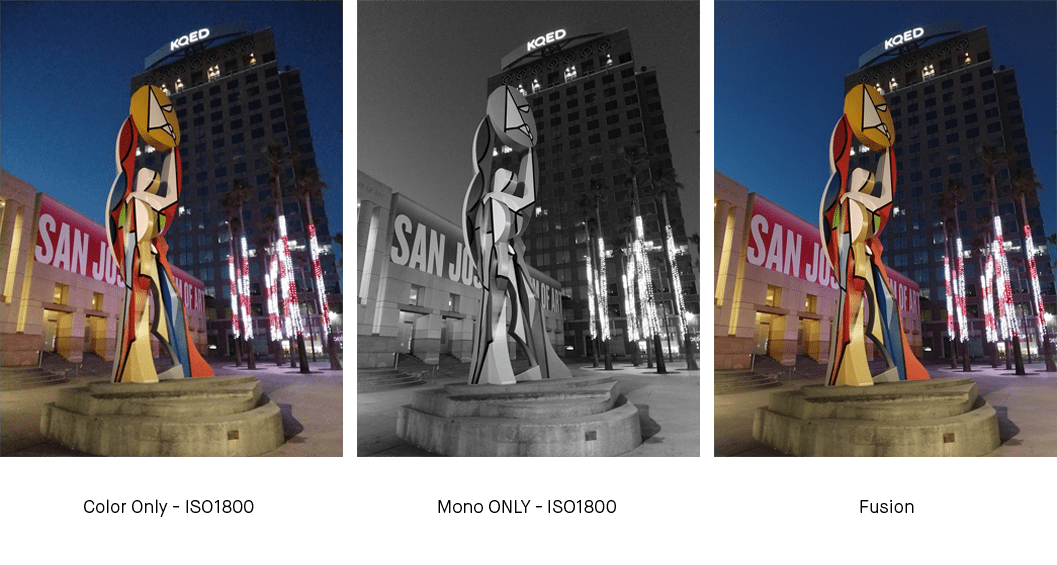

Photo: Essential

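The Essential Phone combines data from a monochrome sensor with data from an RGB sensor to reduce noise. Because the monochrome sensor has no color filter array over its pixels, it collects more light and therefore records a cleaner brightness signal.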
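One plausible way to do that fusion is to take luminance (brightness detail) mostly from the cleaner monochrome sensor and color from the RGB sensor, which works because human vision resolves fine detail mainly through luminance rather than color. This sketch assumes the two images are already aligned pixel for pixel on a 0-255 scale, uses the standard full-range BT.601 (JPEG-style) YCbCr conversion to separate luma from chroma, and introduces a hypothetical `mono_weight` knob; it is not Essential’s actual pipeline.

```python
# Fuse a monochrome sensor's luminance with an RGB sensor's color, one pixel at a time.
# Assumptions: images already aligned, 0-255 scale, full-range BT.601 YCbCr.


def rgb_to_ycbcr(r, g, b):
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 + (-0.168736 * r - 0.331264 * g + 0.5 * b)
    cr = 128 + (0.5 * r - 0.418688 * g - 0.081312 * b)
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b


def fuse_pixel(rgb, mono, mono_weight=0.8):
    """Blend the (cleaner) mono luminance into Y, keep the RGB sensor's chroma."""
    y, cb, cr = rgb_to_ycbcr(*rgb)
    y = mono_weight * mono + (1 - mono_weight) * y  # hypothetical blend weight
    return tuple(min(255.0, max(0.0, c)) for c in ycbcr_to_rgb(y, cb, cr))


if __name__ == "__main__":
    noisy_rgb = (180, 90, 60)  # pixel from the color sensor
    mono_value = 118           # same pixel from the monochrome sensor
    print(fuse_pixel(noisy_rgb, mono_value))
```

Applied across a whole frame, the RGB sensor still decides each pixel’s hue, but most of the brightness detail (and therefore most of the visible noise) comes from the monochrome sensor.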

Futurama

Real-time auto-enhance

AI has rightfully become the soup du jour in technology circles. Apocalyptic visions aside, AI’s ability to learn and become “smarter” over time bodes well for the increasing automation of laborious tasks. Why bother with an Instagram filter when AI can learn what you like and apply it automatically?

Computational Zoom

Should photos capture exactly what the eye can see, or should they capture what the mind remembers? This philosophical (and ethical) quandary will be debated for some time, while researchers Abhishek Badki, Orazio Gallo, Jan Kautz, and Pradeep Sen fine-tune their software for altering foreground and background perspectives within the same image.

Flash photography enhancement through intrinsic relighting

Photos of birthday cakes topped with lit candles rarely capture both the subtle glow and the shadow detail that our eyes perceive. Whereas HDR photography simply combines different luminance values, researchers Elmar Eisemann and Frédo Durand combine a photo taken with flash and one taken without: the no-flash image supplies the ambient lighting, while the flash image supplies color and fine detail, producing a more eye-pleasing image.

http://people.csail.mit.edu/fredo/PUBLI/flash/
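The core idea of fusing a flash/no-flash pair can be sketched as a base/detail decomposition: keep the smooth, large-scale lighting of the no-flash shot and multiply in the fine-detail layer of the flash shot. This is a deliberately crude stand-in for the paper’s method. It uses a plain box blur where Eisemann and Durand use an edge-preserving bilateral filter, works on a 1D grayscale row, and skips the rest of their pipeline; `radius` and `eps` are hypothetical parameters.

```python
# Crude flash/no-flash fusion: ambient base layer from the no-flash shot,
# detail layer from the flash shot. A box blur stands in for a bilateral filter.


def box_blur(row, radius):
    n = len(row)
    out = []
    for i in range(n):
        window = row[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def fuse_flash_no_flash(no_flash, flash, radius=2, eps=1e-6):
    ambient_base = box_blur(no_flash, radius)  # large-scale ambient lighting (no flash)
    flash_base = box_blur(flash, radius)
    # Detail layer: how each flash pixel deviates (as a ratio) from its local average.
    detail = [(f + eps) / (b + eps) for f, b in zip(flash, flash_base)]
    return [a * d for a, d in zip(ambient_base, detail)]


if __name__ == "__main__":
    no_flash = [10, 10, 11, 10, 10, 11, 10, 10]  # dim ambient shot with little texture
    flash = [50, 70, 50, 70, 50, 70, 50, 70]     # bright, textured flash shot
    print(fuse_flash_no_flash(no_flash, flash))
```

The output keeps the no-flash shot’s overall brightness (around 10) but picks up the alternating texture that only the flash revealed. Swapping the box blur for an edge-preserving filter is what keeps the real method from producing halos at strong edges.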

Computational Bounce Flash for Indoor Portraits

Sometimes you can’t avoid using a flash. But placing that flash in the right spot to avoid the deer-in-the-headlights look while creating a more pleasing lighting style can be difficult in a run-and-gun situation like a wedding. So researchers Lukas Murmann, Abe Davis, Jan Kautz, and Frédo Durand built a motorized flash paired with an electronic eye that calculates the best direction to bounce the light, based on training data about what makes a good bounce flash image.

Digital photography as an analogue to film photography has matured. Everything we could do on 35mm film, we can now do better on digital, for less money and without a toxic bath of chemicals. We have thus arrived at the doorstep of an exciting new chapter in photography, where many of the paradigms of how a photo is made and what data it represents will be redefined by computational photography.